Research Staff

David serves as the principal investigator for the NSF-funded components of the project, ensuring both administrative and technical coherence across its various work streams. He also contributes as a mentor in programs such as Google Summer of Code (GSoC), IRIS-HEP, and others.

email: David.Lange@princeton.edu

Education: PhD Physics, University of California, Santa Barbara (1999).

Institution: Princeton/CERN

Research Staff

Vassil provides overall project leadership and strategic vision. He is responsible for all aspects of the project not explicitly delegated to other team members.

email: vvasilev@cern.ch

Education: PhD Computer Science, University of Plovdiv “Paisii Hilendarski”, Plovdiv, Bulgaria (2015).

Institution: Princeton/CERN

Assoc. Prof.

Alexander is a long-term Google Summer of Code mentor. He helps the project maintain parts of its continuous integration systems and offers technical guidance on automatic differentiation in computer graphics.

email: apenev@uni-plovdiv.bg

Education: PhD Computer Science, University of Plovdiv “Paisii Hilendarski”, Plovdiv, Bulgaria (2013)

Institution: University of Plovdiv “Paisii Hilendarski”

Senior Assistant Prof.

Martin's research interests include compiler construction, the analysis of complex software systems, and bioinformatics. He obtained his PhD in 2020 at the University of Plovdiv “Paisii Hilendarski”, where he has continued to develop his academic career.

email: mvassilev@uni-plovdiv.bg

Education: PhD Computer Science, University of Plovdiv “Paisii Hilendarski”, Plovdiv, Bulgaria (2020)

Institution: University of Plovdiv “Paisii Hilendarski”

Senior Scientific Software Developer

After working on the CMS experiment for his PhD in particle physics, Jonas joined CERN in 2021 to work on the ROOT project. He takes care of ROOT's statistical analysis libraries, such as RooFit and Minuit2, as well as the ROOT Python interface. His other academic interests include differentiable programming and financial markets.

email: jonas.rembser@cern.ch

Education: PhD Particle Physics, Ecole Polytechnique, Paris, France (2020)

Institution: CERN

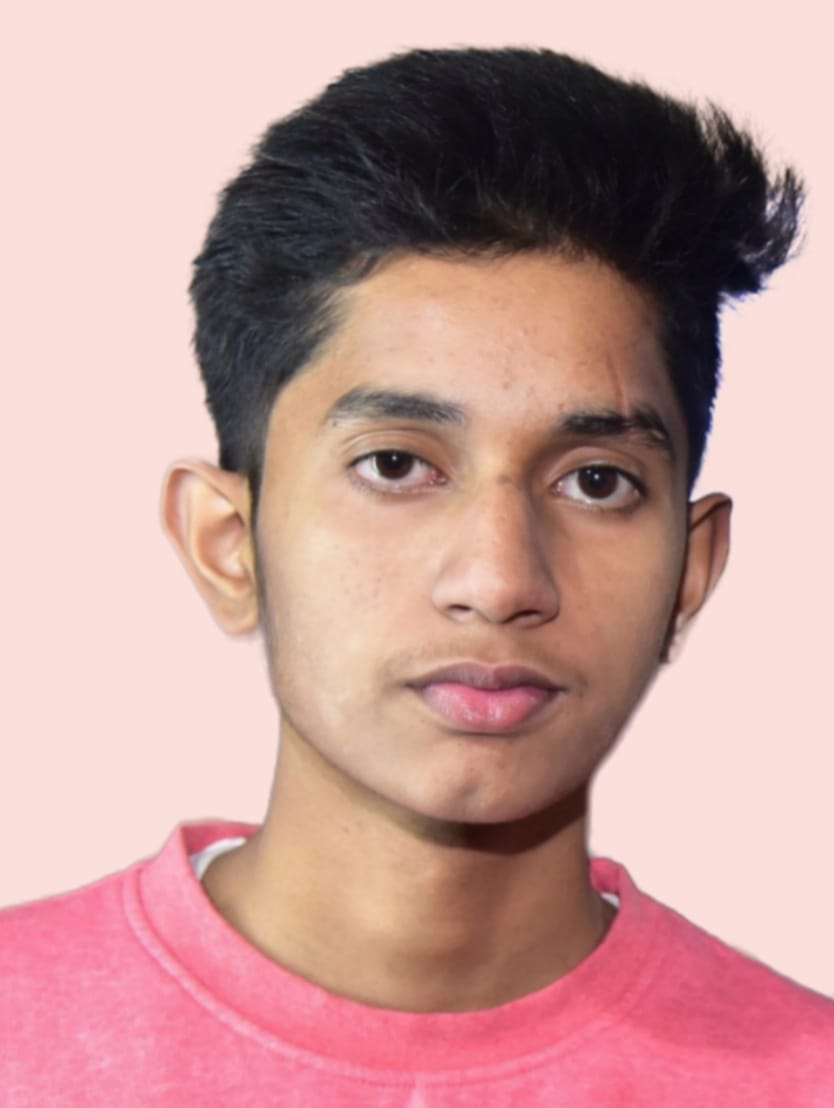

Research Intern at CERN

email: aaronjomyjoseph@gmail.com

Education: B. Tech in Computer Science, Manipal Institute of Technology, Manipal, India

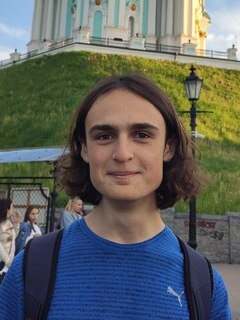

Google Summer of Code 2025 Contributor

email: petro.zarytskyi@gmail.com

Education: Applied Mathematics, Taras Shevchenko National University of Kyiv, Ukraine, 2021-present

IRIS-HEP Fellow

email: maksym.andriichuk@icloud.com

Education: Mathematics, University of Wuerzburg, Germany

Open Source Contributor

email: matthew.c.barton@hotmail.co.uk

Education: PhD Theoretical Nuclear Physics, University of Surrey (2018)

Google Summer of Code 2024 Contributor

email: sahilpatidar60@gmail.com

Education: Computer Science and Engineering, Vindhya Institute of Technology, India

Google Summer of Code 2024 Contributor

email: christinakoutsou22@gmail.com

Education: Integrated Master’s in Electrical and Computer Engineering, Aristotle University of Thessaloniki, Greece

Intern

email: vipulcariappa@gmail.com

Education: B.Tech in Computer Science Engineering, Ramaiah University of Applied Sciences, India

Open Source Contractor at QuantStack, working on xeus-cpp and the surrounding Jupyter and LLVM ecosystem

email: anutosh.bhat@quantstack.net

Education: Integrated Dual Degree (B.Tech & M.Tech) in Data Science & Biological Engineering, IIT Madras, India (2024)

Google Summer of Code 2025 Contributor

email: adityapand3y666@gmail.com

Education: Bachelor of Technology, Birla Institute of Technology, Mesra, India

Google Summer of Code 2025 Contributor

email: mozil.petryk@gmail.com

Education: Bachelor of Computer Science, Ukrainian Catholic University, Ukraine

CERN Summer Student 2025

email: gbistrev@uni-sofia.bg

Education: Nuclear and Particle Physics, Sofia University “St. Kliment Ohridski”, Bulgaria

Google Summer of Code 2025 Contributor

email: lijiayang404@gmail.com

Education: Bachelor of Computer Science, Shanghai University, China

Google Summer of Code 2025 Contributor

email: delatorregonzalezsalvador@gmail.com

Education: Mathematics and Computer Engineering, University of Seville, Spain

Google Summer of Code 2025 Contributor

email: rohan.timmaraju@gmail.com

Education: B.S. Computer Science, Columbia University

Google Summer of Code 2025 Contributor

email: abdelrhman.elrawy1@gmail.com

Education: Master of Applied Computing, Wilfrid Laurier University, Canada

Google Summer of Code 2025 Contributor

email: abhinavdnpiasb@gmail.com

Education: Computer Science & Engineering, Bachelor of Technology, Indian Institute of Technology (IIT) Indore, India

Google Summer of Code 2025 Contributor

email: aditij0205@gmail.com

Education: B.Tech in Computer Science and Engineering (AIML), Manipal Institute of Technology, Manipal, India

Senior research engineer at the Barcelona Supercomputing Center

email: pavlo.svirin@cern.ch

Education: PhD Computer Science, National Technical University of Ukraine, 2014

Technical Writer, Google Season of Docs 2023 contributor

email: 9x3qly27@anonaddy.me

Education: Bachelor's Degree in Science (BS)

GSoC 2024 Contributor

email: shaharechaitanya3@gmail.com

Education: B.Tech in Mechanical Engineering, National Institute of Technology Srinagar, India

GSoC 2024 Contributor

email: delta_atell@protonmail.com

Education: Mathematics, University of Wuerzburg, Germany

Research Intern at CERN, Google Summer of Code 2023 Contributor

email: vaibhav.thakkar.22.12.99@gmail.com

Education: Electrical Engineering and Computer Science, Indian Institute of Technology, Kanpur, India

GSoC 2024 Contributor

email: isaacmoralessantana@gmail.com

Education: Computer Engineering, University of Granada, Spain

Google Summer of Code 2024 Contributor

email: manasi.riya2003@gmail.com

Education: B.Tech in Computer Science Engineering, Graphic Era University, India

Research Intern, GSoC 2024 Contributor

email: khushiyant2002@gmail.com

Education: B.Tech in Computer Science and Engineering, G.G.S.I.P.U, India

GSoC 2024 Contributor

email: atharun05@gmail.com

Education: B.Tech in Computer Science and Engineering, National Institute of Technology, Tiruchirapalli, Tamil Nadu, India

Open Source Contributor

email: shreyasatre16@gmail.com

Education: B.Tech in Electronics and Telecommunications Engineering, Veermata Jijabai Technological Institute, Mumbai, India | Masters in Computer Science, Louisiana State University, Baton Rouge, United States

IRIS-HEP Fellow

email: acherjan@mail.uc.edu

Education: Computer Sciences B.S. + M.S., University of Cincinnati, OH

Research Staff

email: ioana.ifrim@cern.ch

Education: MPhil Advanced Computer Science, University of Cambridge (2018)

Google Summer of Code 2023 Contributor

email: ssmit1607@gmail.com

Education: B.Tech in Computer Engineering, Veermata Jijabai Technological Institute, Mumbai, India

Open Source Contributor

email: rishabhsbali@gmail.com

Education: B.Tech in Computer Engineering, Veermata Jijabai Technological Institute, Mumbai, India

Research Intern, former Google Summer of Code 2022 Contributor

email: anubhabghosh.me@gmail.com

Education: Computer Science and Engineering, Indian Institute of Information Technology, Kalyani, India

Google Summer of Code 2023

email: ksunhokim123@gmail.com

Education: B.S. in Computer Science, University of California San Diego

Google Summer of Code 2023 Contributor

email: krishnanarayanan132002@gmail.com

Education: B.Tech in Electronics and Telecommunications, Veermata Jijabai Technological Institute, Mumbai, India

Open Source Contributor

email: daemondzh@gmail.com

Education: Computer Sciences B.E., Tsinghua University

Research Intern at CERN

email: garimasingh0028@gmail.com

Education: B. Tech in Information Technology, Manipal Institute of Technology, Manipal, India

Research Intern at CERN

email: kundubaidya99@gmail.com

Education: B. Tech in Computer Science and Engg., Manipal Institute of Technology, Manipal, India

GSoC Student 2023

email: me@syntaxforge.net

Education: PhD Student in Computer Science, Indiana University

Google Season of Docs 2022

email: sara.bellei.87@gmail.com

Education: PhD in Physics, Politecnico University of Milan, Italy (2017)

IRIS-HEP Fellow

email: somayyajula@wisc.edu

Education: Computer Sciences B.S., University of Wisconsin-Madison

Research Intern, former Google Summer of Code 2022 Contributor

email: jun@junz.org

Education: Software Engineering, Anhui Normal University, Wuhu, China

Google Season of Docs 2022

email: rohitrathore.imh55@gmail.com

Education: Mathematics & Computing, Birla Institute of Technology, Mesra, India

Google Summer of Code 2022

email: hmanishkausik@gmail.com

Education: B.Tech and M.Tech in Computer Science and Engineering (Dual Degree), Indian Institute of Technology Bhubaneswar

Principal Product Manager at Microsoft Azure Core Engineering

email: tapaswenipathak@gmail.com

Education: B.Tech in Computer Science, Indira Gandhi Delhi Technical University for Women, 2014

Google Summer of Code Contributor 2022

email: mizvekov@gmail.com

Education: Computer Science

IRIS-HEP Fellow

email: partharora99160808@gmail.com

Education: B.Tech in Computer Science, USICT, Guru Gobind Singh Indraprastha University, New Delhi, India

Google Summer of Code Student 2021

email: aua2@illinois.edu

Education: University of Illinois at Urbana-Champaign, Grainger College of Engineering

Research Intern

email: purva.chaudhari02@gmail.com

Education: Computer Science, Vishwakarma Institute of Technology

Google Summer of Code Student 2020

email: gargvaibhav64@gmail.com

Education: Computer Science, Birla Institute of Technology and Science, Pilani, India

Google Summer of Code Student 2020

email: camolezi@usp.br

Education: Computer Engineering, University of São Paulo, Brazil

Google Summer of Code Student 2020

email: r.intval@gmail.com

Education: Mathematics and Computer Science, Voronezh State University, Russia