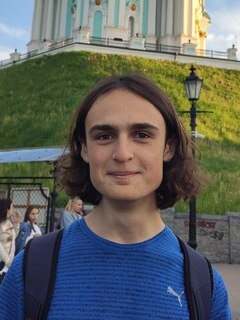

Google Summer of Code 2025 Contributor

email: petro[dot]zarytskyi[at]gmail[dot]com

Education: Applied Mathematics, Taras Shevchenko National University of Kyiv, Ukraine, 2021-present

Ongoing project:

Improve automatic differentiation of object-oriented paradigms using Clad

Clad is a Clang plugin that enables automatic differentiation (AD) of C++ mathematical functions by transforming the abstract syntax tree with LLVM's compiler infrastructure. It integrates into existing codebases without requiring modifications and supports both forward- and reverse-mode differentiation. Reverse mode is efficient for machine learning and for inverse problems that involve backpropagation.

Reverse-mode AD requires two passes: a forward pass that stores intermediate values and a reverse pass that computes the derivatives. Currently, Clad can only store trivially copyable types for function call arguments. This limits support for C-style arrays and for non-copyable types such as unique pointers, which in turn constrains the use of object-oriented programming.
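To make the two passes concrete, here is a hand-written C++ sketch (not code generated by Clad) of a reverse-mode gradient for the hypothetical function f(x, y) = (xy)^2 + x, written so that the variable t is overwritten. The forward pass pushes the old value of t onto a tape before overwriting it, and the reverse pass pops it back to evaluate the local derivative. The function name f_grad and the std::vector tape are illustrative assumptions.

```cpp
#include <cassert>
#include <vector>

// Hand-written reverse-mode gradient of the hypothetical function
//   f(x, y) = (x * y)^2 + x
// Returns f(x, y) and writes df/dx and df/dy to *d_x and *d_y.
double f_grad(double x, double y, double* d_x, double* d_y) {
  std::vector<double> tape;  // values saved in the forward pass

  // Forward pass: evaluate f, saving any value that is about to be
  // overwritten but is still needed by the reverse pass.
  double t = x * y;
  tape.push_back(t);  // old t is needed to differentiate t * t
  t = t * t;
  double result = t + x;

  // Reverse pass: propagate adjoints from the result back to the inputs.
  double d_t = 0.0;
  *d_x = 0.0;
  *d_y = 0.0;

  // result = t + x: both partial derivatives are 1.
  d_t += 1.0;
  *d_x += 1.0;

  // t = t * t: restore the old t, then apply d(t*t)/dt = 2 * t.
  t = tape.back();
  tape.pop_back();
  d_t = 2.0 * t * d_t;

  // t = x * y: dt/dx = y, dt/dy = x.
  *d_x += y * d_t;
  *d_y += x * d_t;

  assert(tape.empty());  // every stored value was consumed
  return result;
}
```

Here the tape holds plain double values, which are trivially copyable. Replacing double with a move-only type such as std::unique_ptr would make tape.push_back(t) fail to compile, because the value cannot be copied; this is the kind of limitation the project addresses.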

The project aims to extend Clad's ability to store intermediate values to non-copyable types. One of the challenges is determining which expressions are modified inside nested function calls, which may require tracking memory locations at run time, an approach that can be inefficient.

The solution involves enhancing the To-Be-Recorded (TBR) analysis, which currently has poor support for nested function calls and does not handle pointer reassignment. An improved TBR analysis will make memory handling predictable and allow Clad to generate optimal code, supporting both non-copyable types and efficient storage of copyable structures.
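The question TBR analysis answers can be illustrated with a toy version restricted to straight-line scalar code, with no nested calls or pointers (precisely the cases this project targets). A value must be stored only if an assignment overwrites it while some adjoint still needs to read it. For example, the old value of t is not needed for t = t + 1.0, whose local derivative is 1, but it is needed for t = t * t. The Assign struct and toBeRecorded function below are hypothetical and are not Clad's implementation.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Toy assignment "lhs = expr". `nonlinear` lists the operands whose values
// appear in the local partial derivatives of expr: both x and y for x * y,
// none for x + y or t + 1.0.
struct Assign {
  std::string lhs;
  std::vector<std::string> nonlinear;
};

// For each statement, returns true if the old value of its lhs must be
// pushed to the tape before the statement overwrites it.
std::vector<bool> toBeRecorded(const std::vector<Assign>& stmts) {
  // Variables whose current value will be read during the reverse pass.
  std::set<std::string> needed;
  std::vector<bool> record;
  for (const Assign& s : stmts) {
    assert(!s.lhs.empty());
    // The reverse pass of s reads the pre-assignment values of these operands.
    for (const std::string& v : s.nonlinear) needed.insert(v);
    // Overwriting a value that an adjoint still needs forces a store.
    record.push_back(needed.count(s.lhs) > 0);
    // The freshly assigned value is not yet needed by any adjoint.
    needed.erase(s.lhs);
  }
  return record;
}
```

A real TBR analysis must also reason about control flow, nested calls, and aliasing through pointers, which this sketch deliberately ignores.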

Project Proposal: URL

Mentors: Vassil Vassilev, David Lange

Completed project:

Optimizing reverse-mode automatic differentiation with advanced activity analysis

Clad is an automatic differentiation Clang plugin for C++. It automatically generates code that computes the derivatives of user-provided functions.

Clad works in two main modes: forward and reverse. Reverse mode computes the derivative by applying the chain rule to each elementary operation, propagating from the result back to the arguments. It is more efficient when there are more independent input variables than dependent output variables (e.g., when calculating a gradient). However, blindly computing the derivatives of all intermediate variables produces code that performs many unnecessary calculations. Advanced activity analysis can identify the variables whose derivatives do not contribute to the result and remove the corresponding computations, improving both the time and memory efficiency of the generated code.
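Activity analysis is commonly formulated as the intersection of two data-flow properties: a variable is varied if it depends on an independent input, and useful if the output depends on it; only variables that are both (active) need derivative code. The sketch below computes this for a toy straight-line program in SSA form (each variable assigned once). The Stmt struct and activeVars function are hypothetical illustrations, not Clad's implementation.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Toy statement "lhs = op(rhs...)" in a straight-line SSA program.
struct Stmt {
  std::string lhs;
  std::vector<std::string> rhs;  // operands read by the statement
};

// Returns the active variables: those that are varied (depend on an input)
// and useful (influence the output). Only these need adjoint computations.
std::set<std::string> activeVars(const std::vector<Stmt>& prog,
                                 const std::set<std::string>& inputs,
                                 const std::string& output) {
  // Forward sweep: a statement's lhs is varied if any operand is varied.
  std::set<std::string> varied = inputs;
  std::set<std::string> defined;
  for (const Stmt& s : prog) {
    bool fresh = defined.insert(s.lhs).second;
    assert(fresh && "program must be in SSA form");
    (void)fresh;
    for (const std::string& v : s.rhs) {
      if (varied.count(v) > 0) {
        varied.insert(s.lhs);
        break;
      }
    }
  }

  // Backward sweep: the operands of a useful statement are useful.
  std::set<std::string> useful = {output};
  for (auto it = prog.rbegin(); it != prog.rend(); ++it) {
    if (useful.count(it->lhs) > 0) useful.insert(it->rhs.begin(), it->rhs.end());
  }

  // Active = varied and useful.
  std::set<std::string> active;
  for (const std::string& v : varied) {
    if (useful.count(v) > 0) active.insert(v);
  }
  return active;
}
```

For c = n * n; y = c * x; z = x * x, with x as the only input and y as the output, only x and y come out active: c depends only on n, and z never reaches y, so neither needs an adjoint.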

Project Proposal: URL

Mentors: Vassil Vassilev, David Lange